Before we look at what prompt engineering is, it is important to understand some basic terms. You can also watch our complete prompt engineering course for free.

This blog will guide you through automating your tasks with prompt engineering and ChatGPT or other LLMs, from basic to pro. Read the complete guide.

The basic terms for prompt engineering are:

What is AI

AI or Artificial Intelligence is the field where we try to make computers think, learn, and understand like humans, so they will be capable of writing, creating content, solving complex problems, drawing, and even coding and programming.

It’s like giving machines a piece of our brain, but with less forgetfulness and no coffee breaks 😅

What is NLP?

NLP, or Natural language processing, is a field in AI where we train and make computers understand human language. So if we ask it a question, it understands and replies.

🧐 NLP is the reason why you can ask Siri about the weather or why your email can filter out spam.

Imagine trying to understand a language from another planet. That’s NLP for computers! 🌐

What is GPT?

This is the powerhouse behind many AI applications you see today.

GPT, or Generative Pre-trained Transformer, is an NLP AI model.

The idea is simple: in AI, we train the computer to do a certain task, and when we finish, we call the result an AI model.

Here, GPT is the name of the NLP model that is trained to understand human language. There are multiple versions, such as GPT-2, GPT-3, GPT-3.5, and GPT-4; ChatGPT is built on GPT-3.5 and GPT-4.

What is LLM?

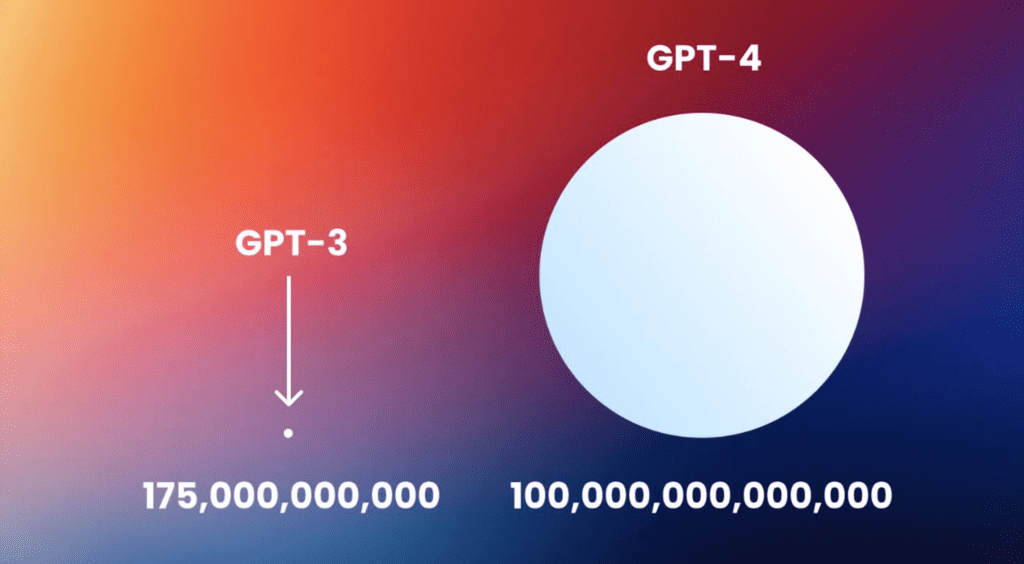

We use this term a lot in prompt engineering. LLM is an abbreviation of Large Language Model. One example is GPT-3, which has 175 billion parameters.

GPT is an example of an LLM. Large Language Models are trained on vast amounts of text data, allowing them to generate impressively human-like text.

What are Parameters?

When we say that GPT-3 has 175 billion parameters, we mean that the model has 175 billion adjustable settings or “knobs” that can be tuned to improve its performance on various language tasks.

So imagine you have a big puzzle that you need to solve, and you have a lot of different pieces that you can use to solve it. The more pieces you have, the better your chance of solving the puzzle correctly.

In the same way, when we say that GPT-3 has 175 billion parameters, we mean that it has a lot of different pieces that it can use to solve language puzzles. These pieces are called parameters, and there are 175 billion of them!

Now, What is Prompt Engineering?

Prompt engineering means designing and optimizing prompts (the input you give to the LLM) to achieve a specific outcome. It’s about learning the language of AI and shaping the conversation to extract the best responses.

Most users of AI, around 90%, casually interact with language models. They ask questions or use prompts found on the internet and get a response. Good enough, but not very deep.

Some users level up, learning to craft better prompts and using them as templates for their next questions.

Another group leverages a paid ChatGPT subscription, using plugins for more power.

Then, there are the 3-5% of users who are prompt engineers. They know how to craft powerful prompts and convert them into reusable templates, build their own tools, and automate workflows. These folks don’t just ‘chat’ with the AI; they ‘connect.’ Our goal in this course is to join this top tier. 🏆🔝

And, remember, we’re not just learning to interact with ChatGPT here; we’re mastering the basics of interacting with any LLM.

You might ask, why not simply use the best prompts or plugins available?

While that may yield good results, our goal goes beyond that.

Relying solely on existing tools has limitations and doesn’t cover all possible scenarios. (Examples were mentioned in the video)

As a prompt engineer, you won’t just be someone who writes good prompts. You’ll be much more, with skills like:

- Automation: Say goodbye to repetitive tasks; you’ll learn to automate them.

- Template Management: Create reusable templates for prompts. It’s like having a personal prompt library!

- Optimization: Master the art of fine-tuning your prompts.

- Customized Workflows: Develop your own unique AI workflows. It’s like building your own AI-powered assembly line!

- Monetization: This skill can open doors for creating AI tools, freelancing, and more.

- AI Project Involvement: Understand, collaborate, and maybe even create your own AI projects. Imagine building the next AutoGPT!

- Developer Aid: Save time as a developer, get help writing, debugging, and explaining code in almost any programming language.

- Security Awareness: Understand vulnerabilities of LLMs, like prompt jailbreaking and injection.

And there’s much more to it! This course is about becoming more than just a user of AI. It’s about becoming an architect of AI interactions. So gear up because this journey will be exciting, enriching, and empowering!

What is a Prompt?

In this section, we’ll explore what a prompt is and its different elements which are important for Prompt Engineering. Let’s get started!

As we already discussed, a prompt is the input text you feed into the LLM (Large Language Model). Think of it as your conversation starter with the AI. Formulating this ‘starter’ greatly influences the response you’ll get from the AI.

Let’s use a simple example to demonstrate this. Suppose you ask an AI model like GPT-3 to write a short story about a bunny.

If your prompt is “Write a story about a bunny,” the AI might create an interesting story, but it could go in any direction.

However, if your prompt is “Write a heartwarming short story about a brave little bunny named Fluffy, who saved his forest friends from a dangerous fox,” the AI will be better guided to produce the kind of story you’re after. See the difference? 😄🐰

Now that we have a basic understanding of a prompt, let’s dive deeper into its elements.

When crafting a prompt or doing Prompt Engineering, consider these important components: Role, Instruction, Context, Input, Output, and optional Examples.

- Role: Think of this as a role-playing game where you instruct the AI to “act as” a particular character or entity. It could be anything from a detective solving a mystery to a language translator. 🕵️♀️🔍

- Instruction: Here, you’re telling the AI what to do. “Write a poem,” “Answer this question,” “Translate this text,” are all examples of instructions. It’s your ‘command’ to the AI. 💻🗣️

- Context: You provide the background or setting for the prompt. This could include the target audience, the style of response, the time frame, etc.

- Input: This refers to the specific topic or content you want the AI to focus on. This is very important, especially later when you learn about prompt templates.

- Output: How do you want your response? This could be in the form of paragraphs, bullet points, JSON, XML, a table, a list, a graph, or any other structure. You can shape the AI’s response as you need. 📊📝

- Example (optional): Providing an example can be useful in some scenarios, as it can help guide the AI’s output. It’s like showing the AI a snapshot of what you want. 📸🌈

Now, let’s take an example using these elements for prompt engineering:

- Role: Act as a world-class chef.

- Instruction: Create a recipe.

- Context: The recipe should be easy to follow for beginners, using everyday ingredients, and should be ready in less than 30 minutes.

- Input: The main ingredient is chicken.

- Output: Provide the recipe in a step-by-step format, with a list of ingredients first, followed by the directions, and ending with serving suggestions.
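If you want to reuse this structure, the elements above can be kept as separate fields and joined into one prompt. Here is a minimal sketch in Python; the names `PromptParts` and `build_prompt` are made up for illustration and are not part of any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptParts:
    """The prompt elements from the list above (illustrative class name)."""
    role: str
    instruction: str
    context: str
    input: str
    output: str
    example: Optional[str] = None  # optional, just like in the list above


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty elements into a single prompt, one element per line."""
    lines = [
        parts.role,
        parts.instruction,
        parts.context,
        parts.input,
        parts.output,
    ]
    if parts.example:
        lines.append(f"Example: {parts.example}")
    return "\n".join(line.strip() for line in lines if line.strip())


# The chef example from above, expressed with the sketch.
chef_prompt = build_prompt(PromptParts(
    role="Act as a world-class chef.",
    instruction="Create a recipe.",
    context=("The recipe should be easy to follow for beginners, using "
             "everyday ingredients, and should be ready in less than 30 minutes."),
    input="The main ingredient is chicken.",
    output=("Provide the recipe in a step-by-step format, with a list of "
            "ingredients first, followed by the directions, and ending with "
            "serving suggestions."),
))
print(chef_prompt)
```

Swapping only the `input` field (say, from chicken to tofu) gives you a new prompt without rewriting the rest, which is the idea behind prompt templates.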

Prompting Language Considerations 📝🎯

Now that we’ve crafted our prompt and know its essential elements, there’s something equally important we need to discuss – the language of our prompts. It’s not just about what you say, but how you say it. Let’s dive into some tips for optimal prompt language for Prompt Engineering.

- Use plain language: AI is smart, but it appreciates simplicity. Avoid jargon and complicated terms that might confuse the model. The clearer your instructions, the better the AI can grasp your intent. Think of it as talking to a really intelligent kid – keep it simple yet precise.

- Be specific: While being concise, ensure you clearly state your expectations and desired outcomes. Detail is your friend here! It’s like ordering at a restaurant – the more specific your order, the closer the dish will be to your liking.

- Maintain a logical structure: A well-organized prompt is like a well-marked road – it’s easier for the AI to navigate and reach the desired destination. So make sure your instructions follow a coherent order and structure.

- Include examples: Providing examples can be like showing a map to the AI. It can guide the model towards generating results that align more closely with your expectations. It’s like teaching a new recipe – showing a picture of the final dish helps get the desired result. 🥘📸

- Consider the context: The AI system’s capabilities and the platform or model you’re working with should shape your prompt. Be mindful of the strengths and limitations of the AI model. It’s like playing to the strengths of a team member for maximum output!

- Language considerations: Ah, the big debate! 💭🌐 Currently, simple and concise English seems to be the optimal language for interacting with LLMs, given that they’re primarily trained on English text. But fear not, non-English speakers! The latest LLMs are increasingly proficient in other major languages too. So, as the field progresses, we’ll be sure to keep you updated on the best practices.

Just like any language, the language of AI prompts is a learned skill.

The more you practice, the more fluent you’ll become. And soon, you’ll be able to ‘speak AI’ like a native! 😄

💡 Remember, the art of communication is not only about knowing what to say but also how to say it. And the same applies to conversing with an AI.

The only limit to what you can achieve with AI is your imagination… and of course, your prompt-crafting skills! 😉 So let’s keep enhancing those skills, shall we?

Main Limitations of LLMs

In this section, we’re diving into a crucial topic: the limitations of Large Language Models (LLMs). These limitations are:

- Based on training data: LLMs can only provide information based on the data they were trained on. By default, they’re not connected to the internet and can’t access real-time updates or data. For example, ChatGPT’s last update was in 2021, so it wouldn’t know about events that happened afterward. Yes, Google Bard may be connected to the internet, and you can use a plugin, but LLMs in general have this limitation.

- Token limits: LLMs operate on tokens. A token can be as short as one character or as long as one word. For instance, a short common word like ‘a’ is typically one token, while a longer or rarer word may be split into several tokens. You might be wondering about the token limit’s significance. Well, each LLM has a maximum number of tokens it can handle in a single request, and this includes both the input and the output tokens. If you’re trying to get the AI to write a novel in one go, you might hit this limit. 📝🚧 Here’s an example: if you ask the AI to summarize a lengthy book in one shot, it might reach its token limit and be unable to deliver the desired result.

- Hallucinations: Sometimes, the AI might generate information that sounds plausible but is incorrect or unverified. This is known as hallucination.

- Connecting with Third Party Services: LLMs, by default, can’t connect to external services for data or actions. For instance, they can’t directly connect to your email to send a message or access a database to pull out specific data.

Does this mean we’re doomed by these limitations? Not at all! In this course, we’ll learn techniques to work around these limitations:

- Connect an LLM to the internet, controlling exactly where and how information is fetched.

- Bypass token limits through smart chunking and chaining techniques.

- Connect LLMs to any third-party service you want, precisely how you want, without restrictions.

- Minimize hallucinations with prompt optimization techniques.
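To give a feel for the chunking and chaining idea from the list above, here is a rough sketch in Python. It makes two loud assumptions: word counts stand in for real token counts (an actual tokenizer would count differently), and `summarize` is a placeholder for whatever function sends a prompt to your LLM. Both `chunk_text` and `summarize_long_text` are illustrative names.

```python
from typing import Callable


def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping chunks of at most max_words words.

    Assumption: words are only a rough proxy for tokens.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def summarize_long_text(
    text: str,
    summarize: Callable[[str], str],  # hypothetical: wraps your LLM call
    max_words: int = 200,
    overlap: int = 20,
) -> str:
    """Chain: summarize each chunk, then summarize the combined summaries."""
    partial = [summarize(chunk) for chunk in chunk_text(text, max_words, overlap)]
    if not partial:
        return ""
    if len(partial) == 1:
        return partial[0]
    return summarize("\n".join(partial))


if __name__ == "__main__":
    # Stand-in "model" that keeps only the first 5 words, to show the flow.
    fake_summarize = lambda s: " ".join(s.split()[:5])
    long_text = " ".join(f"word{i}" for i in range(500))
    print(len(chunk_text(long_text)), "chunks")
    print(summarize_long_text(long_text, fake_summarize))
```

The small overlap keeps a little shared context between neighboring chunks. For very long inputs the combined summaries could themselves exceed the limit, in which case the same step would have to be repeated.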

Exciting, isn’t it? Like I always say, with knowledge comes power! 🚀🔥

Model Parameters for Prompt Engineering

If LLMs were pianos, these parameters would be the knobs and pedals that control the tone, volume, and sound effects. 🎹💫

Temperature

This parameter controls the randomness of the AI’s output.

Imagine you’re ordering a pizza 🍕. A low temperature is like asking for “just cheese and tomatoes, please”. You know exactly what you’re going to get – a simple and predictable Margherita pizza. On the other hand, a high temperature is like asking for “chef’s surprise”. You might end up with a variety of toppings, making your pizza interesting, unique, and a little unpredictable!

Similarly, a low temperature (e.g., 0.2) makes the LLM’s output more focused, consistent, and close to deterministic, while a higher temperature (e.g., 0.8) makes it more diverse, creative, and randomized.
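If you’re curious what happens under the hood, temperature is commonly applied by dividing the model’s raw scores (logits) by the temperature before turning them into probabilities. The sketch below shows that standard calculation on three made-up scores; the numbers are invented for illustration, and real models work with tens of thousands of tokens.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

for t in (0.2, 0.8):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
```

At the lower temperature almost all of the probability lands on the top-scoring token, which is why the output feels predictable. At the higher temperature the other tokens get a real chance of being picked.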

Top_p

Also known as ‘nucleus sampling’.

To illustrate, let’s imagine you’re at a raffle draw 🎟️. If Top_p is set to 0.5, it’s as if you line up the tickets from most to least likely to win and keep only the top ones until their chances add up to 50%. The rest of the tickets? Nope, they don’t make the cut!

Similarly, in LLMs, Top_p is a decimal between 0 and 1 that sets a cumulative probability threshold for choosing the next token. If you set Top_p to 0.5, the AI ranks the candidate tokens by probability, keeps the smallest group of top candidates whose probabilities add up to at least 50%, and picks the next token only from that group.

Simply put, a lower Top_p value creates more predictable and fluent text, as it selects the next token from a smaller, more probable pool. In contrast, a higher Top_p value can produce more diverse and novel outputs, but with a trade-off in fluency and coherence.
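Here is a small sketch of that selection step in Python, using a made-up probability table for four candidate tokens. The function name `top_p_filter` is illustrative, and real implementations work on the model’s full vocabulary, but the keep-until-the-threshold logic is the same idea.

```python
def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most probable tokens until their total reaches top_p.

    The kept probabilities are rescaled so they sum to 1 again.
    """
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the range (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break  # threshold reached: everything after this is dropped
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Made-up next-token probabilities, for illustration only.
next_token_probs = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "rock": 0.05}

print(top_p_filter(next_token_probs, 0.5))
print(top_p_filter(next_token_probs, 0.9))
```

With a Top_p of 0.5 only the single most likely token survives, while 0.9 lets the three most likely tokens stay in the pool. The model would then sample the next token from whatever remains.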

Your Homework:

Try out different values of Temperature and Top_p on a simple prompt. Share with us your observations on how different settings affect the output.

That’s all for today! Remember, understanding these parameters is essential to becoming an effective prompt engineer. So, don’t just learn it, live it!

The System Prompt for Prompt Engineering

Well, let’s say you’re a tour guide. The system prompt is your introductory overview that sets the tone and provides the general context for the tour.

Just like a good tour guide, a well-crafted system prompt can steer the AI towards the right path and help it deliver the most relevant output! 🗺️💡

A system prompt is usually a few sentences or a paragraph that helps the model understand the context and the task it needs to perform.

Examples:

- Providing Context: Let’s say we’re trying to generate a friendly conversation about climate change. A system prompt could be something like this:

"You are a friendly and knowledgeable chatbot discussing the impacts of climate change with a curious user."

By giving the AI this prompt, you’re setting the tone (friendly), the nature of the conversation (knowledgeable), and the topic (climate change).

- Defining Roles: The system prompt can also define the role of the AI. For example, if you want the AI to generate a summary of a book, the prompt might be:

"You are an intelligent AI summarizer. Your task is to provide a concise and clear summary of the following book:"

Now, the AI knows its role and what it needs to deliver: a book summary!

Using system prompts effectively can significantly enhance the performance of your model. The more specific your context and instructions are, the better your model can align its output with your needs.
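In code, a system prompt is usually sent as its own message, separate from what the user types. The sketch below builds that message list in Python. The `role`/`content` layout is the one used by several chat-style LLM APIs, but treat the exact field names as an assumption and check your provider’s documentation; `build_messages` is just an illustrative helper, and no API call is made here.

```python
def build_messages(system_prompt: str, user_message: str) -> list[dict[str, str]]:
    """Pair a system prompt with a user message in a chat-style layout.

    Assumption: the API expects a list of {"role": ..., "content": ...} items.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


messages = build_messages(
    system_prompt=(
        "You are a friendly and knowledgeable chatbot discussing the "
        "impacts of climate change with a curious user."
    ),
    user_message="Why are sea levels rising?",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system prompt in its own variable means you can reuse the same tone and role across many different user questions.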

System Prompts for Prompt Engineering in Real-World Scenarios

System prompts can be super handy in various real-world scenarios. Here are a few examples:

- Customer Support: System prompts can guide the model to respond to customer queries efficiently and in a professional tone. They can also restrict the model to answering only specific kinds of questions (as we saw in the video).

- Content Generation: Whether you need a blog post, a product description, or social media content, system prompts can ensure your AI generates content that’s on-point and on-brand.

- Educational Tools: When creating AI-powered tutoring systems, system prompts can help the AI provide explanations, solve problems, and even grade assignments!

Remember, it’s all about setting the right stage for AI performance. So, keep your system prompts clear, concise, and contextual!

I hope this mini blog has helped you understand the basics of prompt engineering.

Main Part of This Free Prompt Engineering Course

The real power of language models comes from connecting them with our own scripts. For this, you need to learn Python. If you are from a non-coding background, there is good news for you.

We created the ChatGPT Python mini-course guide for you. It covers only the Python you need for working with ChatGPT, which is enough to take prompt engineering to the next level.

Use of Prompt Engineering with ChatGPT & Python

Using ChatGPT and Python, we can do tons of things, like:

- Converting YouTube videos to bullet points

- Summarizing YouTube videos to tweets

- Seeing real-time data live (which is not possible with an LLM by default)

- Creating chatbots

- Summarizing blogs to main points

- Creating applications, etc

Now, how can you do such things with LLMs like ChatGPT and Python? You can check out my free prompt engineering course, where you can learn how to use prompt engineering and ChatGPT with Python to do amazing tasks!

Hope this blog will help you!